...making Linux just a little more fun!

May 2010 (#174):

- Mailbag

- Talkback

- 2-Cent Tips

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- Away Mission - Upcoming in May: Citrix Synergy and Google IO, by Howard Dyckoff

- Henry's Techno-Musings: My Help System, by Henry Grebler

- Pixie Chronicles: Part 2 Bootable Linux, by Henry Grebler

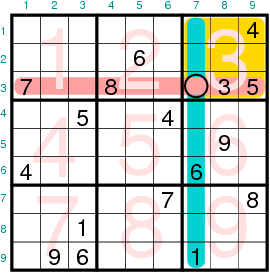

- Solving sudokus with GNU Octave (I), by Víctor Luaña and Alberto Otero-de-la-Roza

- HTML obfuscation, by Ben Okopnik

- Open Hardware and the Penguin: using Linux as an Arduino development platform, by Tom Parkin

- Hey, Researcher! Open Source Tools For You!, by Amit Kumar Saha

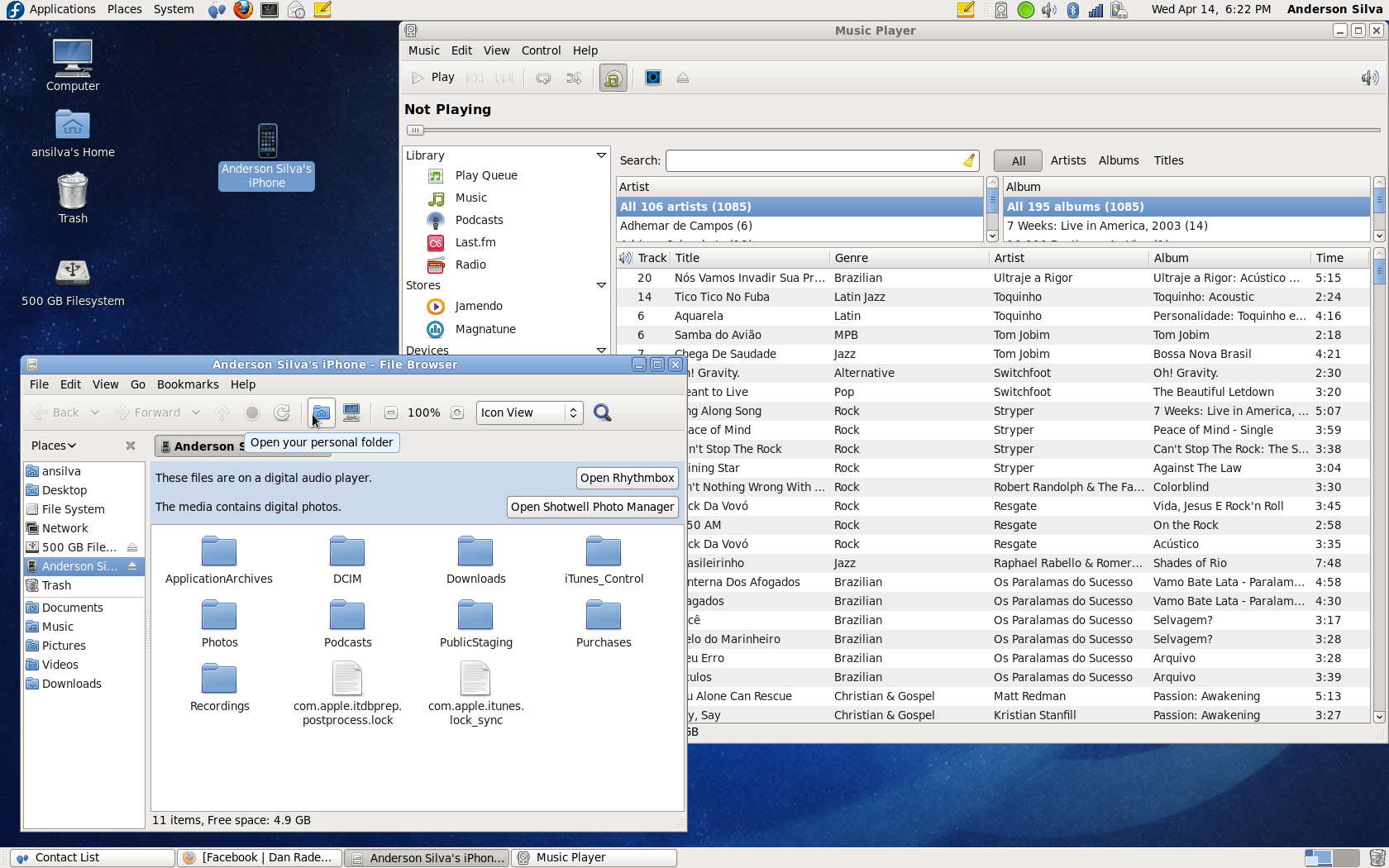

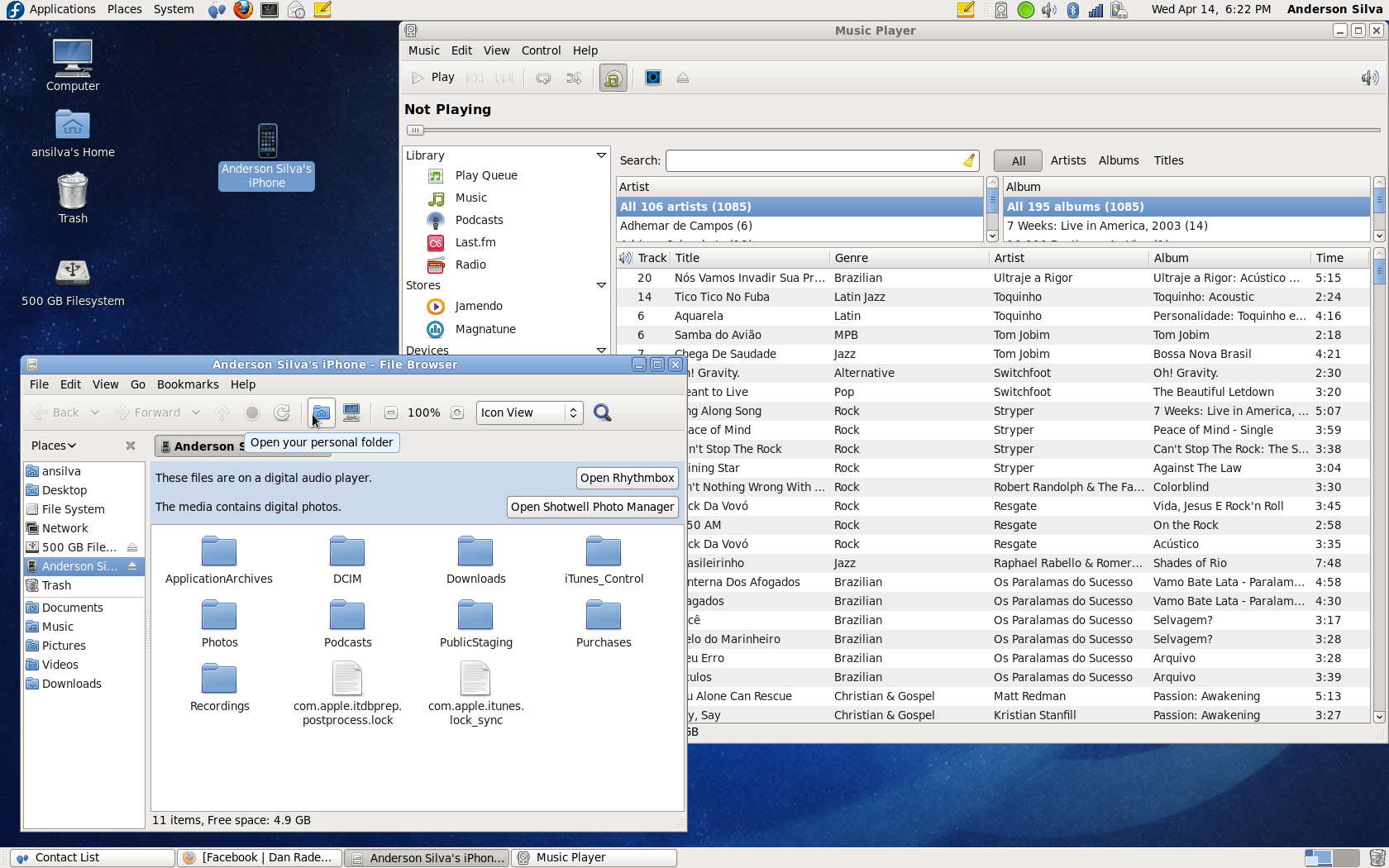

- A quick look at what's coming with Fedora 13, by Anderson Silva

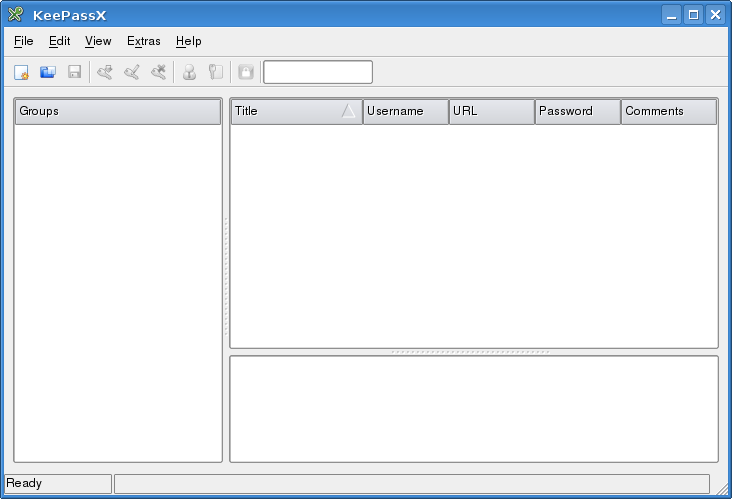

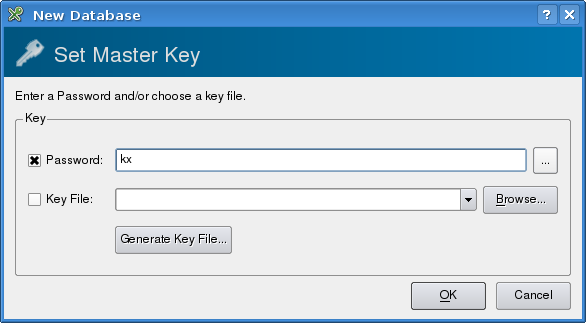

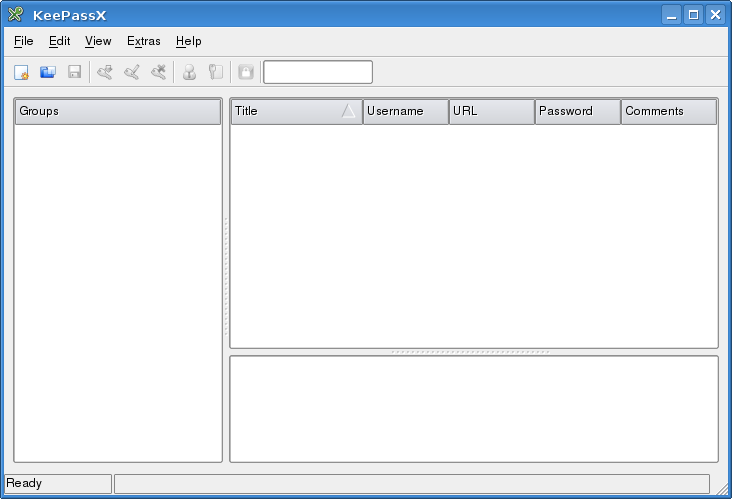

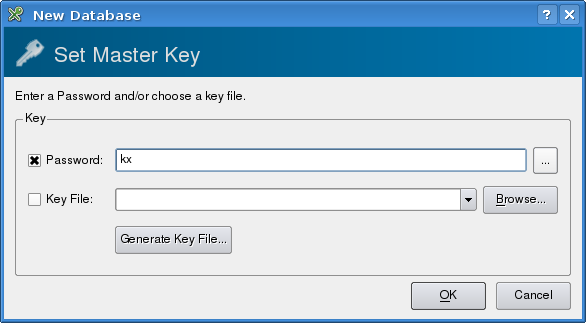

- Password Management with KeePassX, by Neil Youngman

- HelpDex, by Shane Collinge

- XKCD, by Randall Munroe

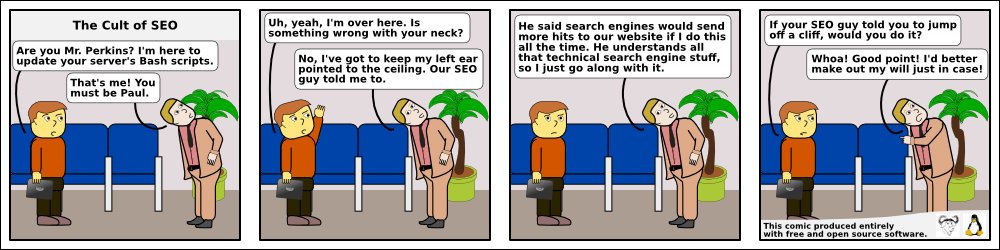

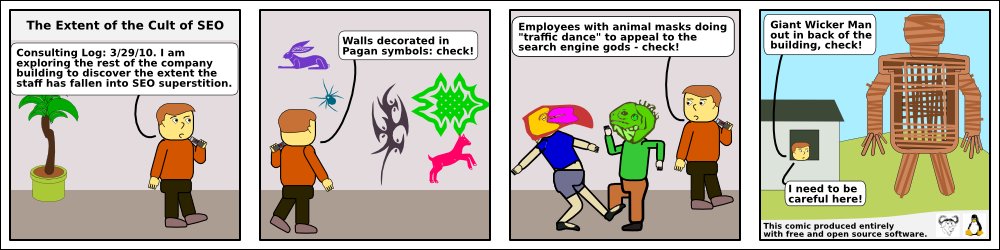

- Doomed to Obscurity, by Pete Trbovich

- The Linux Launderette

Mailbag

This month's answers created by:

[ Amit Kumar Saha, Ben Okopnik, Henry Grebler, Kapil Hari Paranjape, René Pfeiffer, Mulyadi Santosa, Neil Youngman, Aioanei Rares ]

...and you, our readers!

Our Mailbag

rsync internals

Amit Saha [amitsaha.in at gmail.com]

Thu, 1 Apr 2010 12:54:33 +0530

Hello TAG:

I have been using 'rsync' of late for backups, and helping my Prof. do the same as well.

My prof. has ~6G space left on his hard disk, and we are trying to back up everything (~200G) to an external HDD. As 'rsync' progresses, the disk space on the (source) hard disk gets consumed incrementally, and large files (~14G) cannot be copied to the backup hard disk. rsync fails saying "No space left on device". I wonder why. I can try to look into the rsync source to see why this happens. But why is rsync consuming disk space on the source disk? It's a file copy operation, right? It should consume main memory, not secondary memory. Or is it a virtual memory thing?

Thanks for any insights!

Best Regards,

Amit

--

Journal: http://amitksaha.wordpress.com

µ-blog: http://twitter.com/amitsaha

[ Thread continues here (5 messages/6.01kB) ]

compressing sparse file(s) while still maintaining their holes

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Mon, 29 Mar 2010 21:25:23 +0700

For various reasons, we might have a sparse file that we want to compress while maintaining its sparseness. Can we do it the usual way? Let's say we have this file:

$ dd if=/dev/zero of=./sparse.img bs=1K seek=400 count=0

0+0 records in

0+0 records out

0 bytes (0 B) copied, 2.3956e-05 s, 0.0 kB/s

$ ls -lsh sparse.img

4.0K -rw-rw-r-- 1 mulyadi mulyadi 400K 2010-03-29 21:14 sparse.img

$ gzip sparse.img

$ ls -lsh sparse.img.gz

8.0K -rw-rw-r-- 1 mulyadi mulyadi 443 2010-03-29 21:14 sparse.img.gz

$ gunzip sparse.img.gz

$ ls -lsh sparse.img

408K -rw-rw-r-- 1 mulyadi mulyadi 400K 2010-03-29 21:14 sparse.img

Bad. After decompression, the total space occupied by the file "grows" from 4 KiB to 408 KiB.

The trick is to use tar with the -S (--sparse) option:

$ tar -Sczvf sparse.img.tgz sparse.img

$ ls -lsh sparse.img.tgz

8.0K -rw-rw-r-- 1 mulyadi mulyadi 136 2010-03-29 21:18 sparse.img.tgz

$ tar -xzvf sparse.img.tgz

$ ls -lsh sparse.img

4.0K -rw-rw-r-- 1 mulyadi mulyadi 400K 2010-03-29 21:17 sparse.img

As you can see, the number of blocks occupied by "sparse.img" is correctly restored after decompression.
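To check sparseness without reading the ls -s column by eye, you can compare the space actually allocated to a file with its apparent size. The sketch below assumes GNU coreutils stat(1); the helper name is_sparse is hypothetical, chosen only for illustration.

```shell
#!/bin/sh
# is_sparse FILE (hypothetical helper): print "yes" if FILE occupies less
# disk space than its apparent size, i.e. it still has holes; else "no".
# Assumes GNU stat(1): %b = number of allocated blocks, %B = size in bytes
# of each block counted by %b, %s = apparent file size in bytes.
is_sparse() {
    # Word-split stat's three numbers into $1, $2 and $3.
    set -- $(stat -c '%b %B %s' "$1")
    if [ $(( $1 * $2 )) -lt "$3" ]; then
        echo yes
    else
        echo no
    fi
}
```

Going by the ls -s output above, is_sparse sparse.img should report yes after the dd command and after the tar -S round trip, but no after the plain gzip/gunzip round trip.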

--

regards,

Freelance Linux trainer and consultant

blog: the-hydra.blogspot.com

training: mulyaditraining.blogspot.com

[ Thread continues here (3 messages/3.53kB) ]

C/C++ autocompletion for Emacs/vim

Jimmy O'Regan [joregan at gmail.com]

Sat, 24 Apr 2010 12:04:21 +0100

http://cx4a.org/software/gccsense/

--

<Leftmost> jimregan, that's because deep inside you, you are evil.

<Leftmost> Also not-so-deep inside you.

[ Thread continues here (6 messages/3.63kB) ]

Resuscitating a comatose xterm window?

Kat Tanaka Okopnik [kat at linuxgazette.net]

Sun, 18 Apr 2010 20:15:57 -0700

Hi, gang -

After a history of losing data to dying computers (yes, yes, I know,

it's really about not backing up data adequately), I've come to prefer

leaving my e-mail on remote servers rather than dealing with it locally.

As a result, my non-webmail interaction is done via ssh. I've learned to

run screen so that a dropped connection doesn't result in losing

half-composed mail, but I haven't figured out what to do with my frozen

xterm window.

What I mean by this is that I end up losing the connection, having to

reconnect, and then having to kill the frozen-by-dropped-connection

window. Is there a way that I can resuscitate the dead session in the

existing window, rather than opening up a new xterm window and killing

the old one?

Please be gentle as I expose my lack of proper terminology. I'm pretty

sure I'm not describing my problem using all the right words, but I'm

hoping I've come close enough for someone to figure out how I should

have put it. (I'd ask my resident *nix guru to vet my question, but he's

up against a deadline while running short on sleep.) I'd wait to ask my

question, but I suspect I'd forget to ask, again...

--

Kat Tanaka Okopnik

Linux Gazette Mailbag Editor

kat at linuxgazette.net

[ Thread continues here (18 messages/30.46kB) ]

how to close connected/listening socket?

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Sun, 18 Apr 2010 15:38:13 +0700

Hi all

I once read that there is a way to close a listening, half-open, connected, etc. TCP socket using a Linux kernel facility, i.e. one of the /proc entries.

Do any of you recall which /proc (or maybe /sys?) entry does the job?

Thanks in advance.

--

regards,

Freelance Linux trainer and consultant

blog: the-hydra.blogspot.com

training: mulyaditraining.blogspot.com

[ Thread continues here (3 messages/2.31kB) ]

LCA: How to destroy your community

Jimmy O'Regan [joregan at gmail.com]

Tue, 20 Apr 2010 23:54:03 +0100

http://lwn.net/Articles/370157/

Great article. A co-contributor and I have been picking through it point by point to see how many points our project is guilty of (mostly documentation, or the lack thereof).

--

<Leftmost> jimregan, that's because deep inside you, you are evil.

<Leftmost> Also not-so-deep inside you.

[ Thread continues here (2 messages/1.49kB) ]

how to unmount stale NFS connection

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Mon, 19 Apr 2010 01:05:38 +0700

Hi all

Following my recent question regarding how to close a TCP connection, it turns out that in my case the sockets belong to a stale NFS connection. In other words, the client tries to maintain a mount to a server that no longer exists.

So, how do we unmount it? The usual umount or umount.nfs can't do it; they yield error messages like these:

$ sudo umount.nfs /media/nfs -f -v

umount.nfs: Server failed to unmount '192.168.1.2:/opt/data'

umount2: Device or resource busy

umount.nfs: /media/nfs: device is busy

Thanks to Google, I came across this URL:

http://www.linuxquestions.org/linux/answ[...]_gone_down_causing_the_applications_thre

(hopefully I pasted the entire URL correctly).

Essentially, the trick is to "emulate" the dead NFS server. Bring up a

virtual interface such as eth0:0 and assign it the IP address of the

dead server:

# ifconfig eth0:0 192.168.1.2 netmask 255.255.255.0

# ifconfig eth0:0 up

Then retry the umount or umount.nfs command. It takes time, but eventually it will succeed. Finally, bring down the virtual interface:

# ifconfig eth0:0 down
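On systems that prefer iproute2's ip(8) over ifconfig, the same trick might be scripted as below. This is a hedged sketch, not Rahul's or Mulyadi's exact procedure: unstick_nfs and run are hypothetical helper names, and the /24 prefix simply mirrors the 255.255.255.0 netmask above. It needs root; set DRY_RUN=1 to print the commands instead of running them.

```shell
#!/bin/sh
# run CMD...: execute a command, or only print it when DRY_RUN is non-empty.
run() {
    if [ -n "$DRY_RUN" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# unstick_nfs IP PREFIX DEV MOUNTPOINT (hypothetical helper):
# temporarily give DEV the dead server's address under the alias label
# DEV:0, retry the forced unmount, then remove the address again.
unstick_nfs() {
    ip_addr=$1 prefix=$2 dev=$3 mnt=$4
    run ip addr add "$ip_addr/$prefix" dev "$dev" label "$dev:0"
    # As noted above, the unmount may take a while to complete.
    run umount -f "$mnt"
    # Clean up the borrowed address whether or not the unmount worked.
    run ip addr del "$ip_addr/$prefix" dev "$dev"
}
```

For the case above, that would be: unstick_nfs 192.168.1.2 24 eth0 /media/nfs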

PS: Big credits to Rahul K., who shared this valuable information.

--

regards,

Freelance Linux trainer and consultant

blog: the-hydra.blogspot.com

training: mulyaditraining.blogspot.com

[ Thread continues here (3 messages/3.28kB) ]

Remote mail usage (Was Resuscitating a comatose xterm window?)

Kapil Hari Paranjape [kapil at imsc.res.in]

Tue, 20 Apr 2010 10:05:28 +0530

Hello,

In the context of remote access to mail,

On Mon, 19 Apr 2010, Kat Tanaka Okopnik wrote:

> I tried running a screen session locally, and then ssh to where my

> mail lives, but that resulted in some keystrokes getting interpreted in

> ways that I didn't like (e.g. the backspace key becomes something else,

> IOW not backspacing). This is probably a whole different question that

> should go under another subject line...

So I thought I should try to put together some thoughts on remote

usage of mail. Since this has nothing to do with the original

question, I have broken the thread.

Case 1: Remote system only allows webmail access

------------------------------------------------

This is unfortunately all too common nowadays. Possible solutions:

1. Live with it/abandon it entirely

2. If forwarding is allowed, then forward your mail to a "better"

server.

3. "freepops" may work for you. This is a program that acts as a

POP3 front-end to a number of webmail interfaces.

Case 2: Remote system only allows IMAP/POP access

-------------------------------------------------

This is slightly more common, yet often sub-optimal!

1. Set up your favourite mail client to access IMAP/POP.

2. Use one of the many programs that fetches mail from such servers.

Each of these has its own problems. In the first case, you do not get

offline access to your mail, while in the second case you might end

up paying a lot to download an attachment that you did not really

need to access right away.

Case 3: Remote system allows shell access

-----------------------------------------

This is the best-case scenario, and it is increasingly scarce!

1. Log in to the system and use whatever client is locally available. In addition, you may want to run this client under "screen" or "dtach" in order to re-use sessions in case of lost connections.

2. Filter the mail on the remote system (using procmail and friends)

to sort out the mail (optionally separating out attachments). Then

download the mail you really want to read using a number of different

techniques (rsync, unison, git and others or those mentioned in Case 2).
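Case 3 can be sketched in shell roughly as follows. This is only an illustration under assumptions: the host name mailhost, the folder Maildir/.keep (standing in for whatever your procmail rules sort mail into) and the helper names are all hypothetical. ssh -t forces allocation of a terminal, which screen needs; screen -D -R reattaches to a running session (first detaching it from any other terminal that still holds it) or starts a new one if none exists. Set DRY_RUN=1 to print the commands instead of running them.

```shell
#!/bin/sh
# run CMD...: execute a command, or only print it when DRY_RUN is non-empty.
run() { if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi; }

# Para 1 (hypothetical helper): (re)attach to a screen session on the
# mail host, so a dropped connection doesn't lose half-composed mail.
mail_session() {
    host=$1
    run ssh -t "$host" screen -D -R
}

# Para 2 (hypothetical helper): pull only an already-filtered folder down
# for offline reading; the trailing slashes copy the folder's contents.
fetch_mail() {
    host=$1 folder=$2
    run rsync -av "$host:$folder/" "$HOME/$folder/"
}
```

After a dropped connection, running mail_session mailhost again from any terminal picks up the same session.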

Clearly, Kat was using (Case 3, Para 1).

Kapil.

--

[ Thread continues here (2 messages/5.71kB) ]

VirtualBox Tricks?

clarjon1 [clarjon1 at gmail.com]

Thu, 25 Mar 2010 09:20:19 -0400

Hey Gang!

I don't know how many of you use VirtualBox (OSE or otherwise), but I was wondering if any of you had any cool tricks of the trade that readers might be able to use.

To start off with, here's one that makes VBox really easy for my mom

to use, a little something I've called the "XP Start Button":

It's essentially an icon on the desktop/menu entry. I upgraded her laptop and found that she's never, ever had a need to boot up Windows, but unfortunately the tax guy wants her to run Windows-specific software.

Anyways, here's the tip:

Create a .desktop file in a folder/desktop, and fill it with the following:

[Desktop Entry]
Encoding=UTF-8
Version=1.0
Type=Application
Terminal=false
Name[en_US]=Windows XP
Exec=VirtualBox --startvm "Windows XP"
Name=Windows XP

Of course, replace the name of the virtual machine with the one you wish to start when the .desktop file is opened.

Hope to hear more tips; I wonder what tweaks I might learn!
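If you need launchers for several VMs, the file can also be generated by a small script. make_vm_launcher below is a hypothetical helper: it writes the same keys as the example above, with the VM name substituted into Name and Exec, to DIR/<name>.desktop (spaces in the name become hyphens in the file name). Which DIR your desktop picks up (a desktop folder, or ~/.local/share/applications for menu entries) depends on your environment.

```shell
#!/bin/sh
# make_vm_launcher "VM NAME" DIR (hypothetical helper): write a .desktop
# file into DIR that starts the named VirtualBox VM, and print its path.
make_vm_launcher() {
    vm=$1 dir=$2
    # Build the file name from the VM name, turning spaces into hyphens.
    file="$dir/$(printf '%s' "$vm" | tr ' ' '-').desktop"
    mkdir -p "$dir"
    # Unquoted EOF, so $vm is expanded inside the here-document.
    cat > "$file" <<EOF
[Desktop Entry]
Encoding=UTF-8
Version=1.0
Type=Application
Terminal=false
Name[en_US]=$vm
Exec=VirtualBox --startvm "$vm"
Name=$vm
EOF
    echo "$file"
}
```

For example, make_vm_launcher "Windows XP" ~/Desktop reproduces the launcher above as ~/Desktop/Windows-XP.desktop.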

Sync error: ToC on Homepage and current issue page

Amit Saha [amitsaha.in at gmail.com]

Fri, 2 Apr 2010 10:36:14 +0530

Hello:

The contents for April on the homepage and on the issue page seem to

be out of sync: http://linuxgazette.net/173/index.html

Hope I am not seeing this in the middle of an update.

-Amit

--

Journal: http://amitksaha.wordpress.com

µ-blog: http://twitter.com/amitsaha

[ Thread continues here (5 messages/8.42kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 174 of Linux Gazette, May 2010

Talkback

Talkback:173/starks.html

[ In reference to "Picking Fruit" in LG#173 ]

Henry Grebler [henrygrebler at optusnet.com.au]

Mon, 05 Apr 2010 11:53:28 +1000

Hi Ken,

Great article. And you sound like a beautiful person.

But enough of this man love.

Re melons. Someone of the medical persuasion taught me that the way to

pick melons is to percuss them (see

http://en.wikipedia.org/wiki/Percussion_%28medicine%29). I am not

myself medically inclined, but I have applied this process for several

years. To use your metaphor, I certainly do not bat 1.000. I couldn't

tell you for sure how well I bat, but I'd guess up around .7 (I won't

dignify it with 3 decimal places since it could be .6). But I am

quietly confident that I bat much better these days than I used to.

I never bring home concrete blocks; the ones I bring home taste from

ok to excellent.

On the subject of HeliOS, I probably could not be further from Central

Texas. Your project captures my imagination, but at this distance I

can only offer moral support.

I have some ideas related to picking candidates for Linux conversion,

based on points raised in your article. I realise that I do not have a

complete understanding of the environment in which you find yourself,

but I respectfully offer these as possibly useful, perhaps with some

adaptation.

We all have an investment in what we know. You said it yourself:

"People get used to doing things one way." I'm writing this on a new

platform (second-hand hardware). I got fed up with Fedora when I

discovered that F10 needed a minimum of 512MB of RAM. I have a Redhat

8 firewall which until recently was running just fine on a 24MB

Pentium 1 100. So I've installed FreeBSD. My /History file indicates

that I started installing 17 January 2010. While I was building I

still had my old Fedora machine. Eventually (16 March 2010), I decided

to bite the bullet and move to FreeBSD.

But I'm still running my old Fedora machine. I've got at least a dozen xterm sessions SSHed into it. I keep discovering things I want to be able to do, things I can do on the Fedora box that I can't yet do on my FreeBSD box. Gradually I am installing the software I need.

And, boy, am I glad that I had the safety net. Recently, I rebooted my

FreeBSD machine because I'd installed quite a lot of software and I

wanted to be sure it would still work after a reboot - and it didn't!

In the event, it was only off the air for a day. But I know that from

the perspective of today looking backwards. Faced with a machine hung

somewhere in the boot process, my first reaction was panic. And

terror.

Further, without the ability to fall back to my other machine, I would not have been able to get onto the forums which eventually gave me a pointer about how to work around my problem.

Perhaps some of the prospects for conversion to Linux need the safety

net of being able to go back to the familiar environment of Windows

until they can be weaned off.

[ ... ]

[ Thread continues here (1 message/6.01kB) ]

Talkback:171/nonstyleguide.html

[ In reference to "Words, Words, Words" in LG#171 ]

Henry Grebler [henrygrebler at optusnet.com.au]

Tue, 06 Apr 2010 10:32:43 +1000

Hi Gang,

In his article, "Words, Words, Words", Rick complained about

"Levitz is having a sale at their Oakland warehouse."

I was going to write explaining Rick's objection. But that would be

naughty. Instead, I'll explain mine.

The sentence uses number inconsistently.

I don't care if "Levitz" is singular or plural - so long as it is only

one, not both - especially within a single sentence. It defies logic

for an entity to be simultaneously singular and plural.

So, I'm ok with either of these:

"Levitz is having a sale at its Oakland warehouse."

"Levitz are having a sale at their Oakland warehouse."

Cheers,

Henry

2-Cent Tips

[2 cent tips] Better reboot using magic SysRq

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Tue, 27 Apr 2010 07:26:47 +0700

What is a better way to reboot your system in case it hangs? I mean, rather than the three-finger salute or kicking the power switch?

Let's assume you have enabled the magic SysRq key beforehand. Make sure this feature is compiled into the kernel (CONFIG_MAGIC_SYSRQ) and that you enable it (echo 1 > /proc/sys/kernel/sysrq). Then, when disaster strikes, you can try pressing these key combos in the following order:

1. Alt-SysRq-s (sync all mounted filesystems to disk)

2. Alt-SysRq-u (remount all filesystems read-only)

3. Alt-SysRq-e (send SIGTERM to all tasks, except init)

4. Alt-SysRq-b (finally, reboot the system)

If your keyboard doesn't have SysRq, use PrintScreen instead. Here's how I do it: press and hold Alt, press and hold SysRq, then press s (or the other key), then release them all. Alternatively, try this: press and hold Alt, press and release SysRq, press s (or the other key), then release everything.

In most cases, the above sequence reboots your system more cleanly. If not, just fall back to the "traditional" methods.

Reference: Documentation/sysrq.txt in the kernel source tree.
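Besides 0 (disabled) and 1 (everything enabled), sysrq.txt also allows /proc/sys/kernel/sysrq to hold a bitmask of enabled functions, where 16 enables sync, 32 remount read-only, 64 signalling of processes and 128 reboot/poweroff. A minimal sketch for checking whether a given value permits all four keys above; sysrq_allows_sueb is a hypothetical helper name.

```shell
#!/bin/sh
# sysrq_allows_sueb VALUE (hypothetical helper): print "yes" if the given
# kernel.sysrq value permits the keyboard s-u-e-b sequence, else "no".
# 0 disables SysRq, 1 enables everything, and any other value is a
# bitmask: 16 = sync, 32 = remount read-only, 64 = signal processes,
# 128 = reboot/poweroff; 16 + 32 + 64 + 128 = 240.
sysrq_allows_sueb() {
    v=$1
    if [ "$v" -eq 1 ]; then
        echo yes
    elif [ "$v" -ne 0 ] && [ $(( v & 240 )) -eq 240 ]; then
        echo yes
    else
        echo no
    fi
}
```

To check the running kernel: sysrq_allows_sueb "$(cat /proc/sys/kernel/sysrq)"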

--

regards,

Freelance Linux trainer and consultant

blog: the-hydra.blogspot.com

training: mulyaditraining.blogspot.com

[ Thread continues here (5 messages/3.52kB) ]

2-cent Tip: Screen Shots

Ben Okopnik [ben at linuxgazette.net]

Tue, 13 Apr 2010 15:56:07 -0400

----- Forwarded message from Henry Grebler <henrygrebler at optusnet.com.au> -----

I was writing an article. I needed more than words; I needed screen

shots. Over the years, I've done this in different ways. Of late, I've

used ImageMagick(1) for this sort of work, in particular,

import - saves any visible window on an X server and outputs

it as an image file. You can capture a single window, the

entire screen, or any rectangular portion of the screen.

I was capturing parts of web pages. Then I came to a roadblock. I

clicked on a button and it produced a drop-down list. I wanted to

capture the contents of the drop-down list. But, some drop-down lists

are unfriendly. For example, in Firefox, if I click on File, the usual

drop-down list appears, but now my keyboard is deactivated. I also

lose most mouse functions, except for moving the cursor. And, if I

click anywhere, the drop-down list disappears.

So how could I take the screen shot?

I started up a vncserver and a vncviewer (client), and then invoked Firefox inside the VNC client.

Click on File, move the cursor out of the VNC client, and, voila - I

can now use import to take a screen shot of the menu of the Firefox

running in vncviewer.
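A variation on the same idea, assuming the VNC server is running as X display :1 (vncserver prints the display number it actually uses): import can be pointed at that display through the DISPLAY environment variable and told to save its whole root window with -window root. capture_display is a hypothetical wrapper; set DRY_RUN=1 to print the command instead of running it.

```shell
#!/bin/sh
# run CMD...: execute a command, or only print it when DRY_RUN is non-empty.
run() { if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi; }

# capture_display DISPLAY OUTFILE (hypothetical helper): save the whole
# screen of the given X display (e.g. the VNC server's) with ImageMagick.
capture_display() {
    run env DISPLAY="$1" import -window root "$2"
}
```

For example: capture_display :1 firefox-menu.png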

----- End forwarded message -----

[ Thread continues here (3 messages/5.74kB) ]

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff

Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to bytes@linuxgazette.net. Deividson can also be reached via Twitter.

News in General

Utah Jury Confirms Novell Owns Unix Copyrights

At the end of March, the jury for the District Court of Utah trial

between SCO Group and Novell issued a verdict determining the

ownership of important Unix copyrights.

The jury's decision confirmed Novell's ownership of the Unix copyrights, which SCO had claimed to own in its attack on Linux.

Novell stated that it remains committed to promoting Linux, including

by defending Linux on the intellectual property front.

Ron Hovsepian, Novell president and CEO, said "This decision is good

news for Novell, for Linux, and for the open source community. We have

long contended that this effort against Linux has no foundation, and

we are pleased that the jury, in a unanimous decision, agrees. I am

proud of Novell's role in protecting the best interests of Linux and

the open source community."

SCO is reported as currently weighing an appeal and it may also

continue its related lawsuit against IBM for both Unix infringement

and some contract conflicts.

There is a little more on the tail end of this saga. From

http://www.groklaw.com:

"The SCO bankruptcy hearing on the sale of the Java patent will be on

April 20. That's this proposed sale to Liberty Lane for $100,000, and

that's an LLC affiliated with Allied Security Trust, the

anti-patent-trolls company, if you've dropped a stitch and can't keep

up as SCO's assets get sold off bit by bit. If anyone else has bid,

other than Liberty Lane, then there would be an auction on the 19th,

but SCO told the court they don't expect that to happen."

HP ranked 10th in FORTUNE 500 List

HP has entered the coveted top 10 category of the Fortune 500 list.

IBM came in at 20th, Microsoft at 36th, Dell at 38th, and Apple 56th,

just ahead of Cisco at 58th. Amazon.com was 100th with $24 B in

revenue. Oracle was 105th at $23 B, but Sun was separately listed at

204th with $11 B in revenue. Combined with Sun's revenue, the new

Oracle would be competing with Apple and Cisco for their rankings in

the mid-50s of the list.

See the full list here:

http://money.cnn.com/magazines/fortune/fortune500/2010/.

MySQL Advisory Board Meetings set for May

Oracle has announced that the MySQL Customer Advisory Board meetings

will take place on May 6, 2010 in Amsterdam and June 3, 2010 in

Redwood Shores, Calif. The meetings will serve as a forum for MySQL

customers to learn about new enhancements to the MySQL database, and

provide feedback. The Storage Engine Advisory Board will focus on

MySQL's storage engine API, and its future.

The announcement coincided with the recent MySQL User Conference, which will remain separate from the annual Oracle OpenWorld, to be held in San Francisco this September.

Also, Oracle announced MySQL Cluster 7.1, which introduces MySQL

Cluster Manager, a new solution that simplifies and automates the

management of the MySQL Cluster database. More details are in the

Products section of News Bytes, below.

Ubuntu 10.04 Long Term Support (LTS) Server Reaches for the Clouds

Canonical has released Ubuntu 10.04 LTS Server Edition with extended

security and maintenance updates to all users for five years. Ubuntu

10.04 LTS extends the cloud-computing capability of Ubuntu Enterprise

Cloud with Eucalyptus, an open source cloud management tool for

private computing clouds.

More info in the Distro section of News Bytes, below.

Synaptics' Advanced Gesture UI Moves To Linux Operating Systems

In April, Synaptics announced the extension of its industry-leading

Synaptics Gesture Suite to the Linux operating system environment.

This release extends the Synaptics Gesture Suite - which includes

sophisticated multi-finger gestures - to OEMs that offer Linux-based

solutions.

Synaptics has a strong leadership position in the Microsoft

Windows-based TouchPad market and Synaptics Gesture Suite (SGS). Today's

announcement extends the industry's broadest gesture suite across a wide

range of leading Linux operating systems.

"The Synaptics Gesture Suite for Linux enables our OEMs to leverage a

broad range of gesture capabilities across Linux operating systems,

and offers extensibility into new Linux flavors such as Google Chrome

OS and additional support for touch-enabled remote control devices,"

said Ted Theocheung, head of Synaptics PC and digital home products

ecosystem. "SGS ensures optimized interoperability of gestures,

minimal gesture interpretation errors, and proven usability

performance across the widest range of TouchPad sizes."

Supported Linux operating systems include Fedora, Millos Linpus, Red

Flag, SLED 11 (SuSE), Ubuntu, and Xandros. SGS for Linux (SGS-L)

supports a wide range of pointing enhancements and gestures including

two-finger scrolling, PinchZoom, TwistRotate, PivotRotate,

three-finger flick, three-finger press, Momentum, and ChiralScrolling.

Bundled with Synaptics' enhanced driver interface, SGS-L is provided

free of charge to Synaptics OEM/ODM partners when ordered with

Synaptics TouchPad and ClickPad products.

Synaptics Gesture Suite for Linux (SGS-L) helps manufacturers bring

new interactivity and productivity to their notebook PC systems and

other peripheral devices that use Synaptics TouchPads. To find out

more about Synaptics Gesture Suite for Linux, please visit:

http://www.synaptics.com/go/SGSL.

Gnome and KDE will co-host 2011 Dev Conference

Following the successful Gran Canaria Desktop Summit in 2009, the

GNOME Foundation and KDE e.V. Boards have decided to co-locate their

flagship conferences once again in 2011, and are taking bids to host

the combined event. Desktop Summit 2011 plans to be the largest free

desktop software event ever.

In July of 2009, the GNOME and KDE communities came together for the

Gran Canaria Desktop Summit, the first co-located KDE/GNOME event. It

was a major success and an opportunity for the leaders of the free

software desktop efforts to communicate on common issues and attend

combined social events. The attendees from both projects expressed

great interest in repeating the event and merging the programs to

synchronize schedules and make the event an even greater opportunity

for the KDE and GNOME teams to learn from each other and work

together.

"The Gran Canaria Desktop Summit was a great first event," said

Vincent Untz, GNOME Foundation Board Member. "We enjoyed working with

our KDE friends at GCDS in 2009, and want to increase our cooperation

in 2011. We plan to go beyond simple co-location this time, and

actually plan a combined schedule in 2011 so that KDE and GNOME

contributors have every opportunity to work with and learn from each

other."

The GNOME and KDE projects will hold independent events in 2010.

GUADEC, the GNOME Project's annual conference, will be held in The

Hague, Netherlands on July 24 through July 30 of this year. KDE's

Akademy will be located in Tampere, Finland from July 3 to 10 this

year. Both groups will likely hold smaller sprints through 2010 and

early 2011 to prepare for the combined 2011 Desktop Summit.

"The KDE e.V. board felt that GCDS was a fantastic event, and we

learned what works well and what can be improved when co-hosting an

event with our GNOME friends," said Cornelius Schumacher of the KDE

e.V.

More than 850 contributors to the GNOME and KDE projects gathered in

Gran Canaria last July. The event brought together attendees from 50

countries, and helped raise local awareness of free software and had a

measurable impact on the local community. The impact of the event

continues with nearly 2 million hits to the summit Web site following

the event.

The projects are seeking a host in Europe at a location that can

handle more than 1,000 participants. For detailed requirements,

prospective hosts can see the requirements for Akademy

(http://ev.kde.org/akademy/requirements.php) and GUADEC

(http://live.gnome.org/GuadecPlanningHowTo/CheckList). Applications

are welcome before May 15th, and should be sent to the KDE e.V.

(kde-ev-board@kde.org) and the GNOME Foundation (board@gnome.org)

boards.

Conferences and Events

- IBM Impact 2010 Conference

May 2-7, Las Vegas, Nev.

IDN Promotion Code: IMP10IDN

http://www-01.ibm.com/software/websphere/events/impact/index.html.

- Citrix Synergy-SF

May 12-14, 2010, San Francisco, CA

http://www.citrix.com/lang/English/events.asp.

- Apache Lucene EuroCon 2010

May 18-21, Prague, Czech Republic

http://lucene-eurocon.org/.

- Google IO Developer Conference - now closed

May 19 - 20, Moscone Center, San Francisco, CA

http://code.google.com/events/io/2010/.

- Black Hat Abu Dhabi 2010

May 30 - Jun 2, Abu Dhabi, United Arab Emirates

http://www.blackhat.com/html/bh-ad-10/bh-ad-10-home.html.

- Internet Week New York

June 7-14, 2010, New York, New York

http://internetweekny.com/.

- Southeast Linuxfest (SELF 2010)

June 12-13, Spartanburg, SC

The Southeast LinuxFest is a community event for anyone who wants to

learn more about Linux and Free & Open Source software. It is part

educational conference, and part social gathering. Like Linux itself,

it is shared with attendees of all skill levels to communicate tips and

ideas, and to benefit all who use Linux/Free and Open Source Software.

The Southeast LinuxFest is the place to learn, to make new friends, to

network with new business partners, and most importantly, to have fun!

Register today (FREE to attend)! Book your hotel room now and save!

http://www.southeastlinuxfest.org/registration/form

http://www.southeastlinuxfest.org

- Über Conf 2010

June 14-17, Denver, CO

http://uberconf.com/conference/denver/2010/06/home.

- Semantic Technology Conference

June 21-25, Hilton Union Sq., San Francisco, CA

http://www.semantic-conference.com/.

- O'Reilly Velocity Conference

June 22-24, 2010, Santa Clara, CA

http://en.oreilly.com/velocity.

- USENIX Conference on Web Application Development (WebApps '10)

June 23-24, Boston, MA

Join us for the USENIX Conference on Web Application Development

(WebApps '10) which will take place June 23-24, 2010, in Boston, MA,

as part of the USENIX Federated Conferences Week.

WebApps '10 is a new technical conference filled with the latest

research in all aspects of developing and deploying Web applications.

The diverse 2-day conference program will include invited talks by

industry leaders including Adam de Boor, Google, on "Gmail: Past,

Present, and Future," a variety of topics and new techniques in the

paper presentations, WiPs, and a poster session. Since WebApps '10 is

part of USENIX Federated Conferences Week, you'll also have increased

opportunities for interaction and synergy across multiple disciplines.

Register by June 1 and save up to $400! Additional discounts are available.

http://www.usenix.org/webapps10/lg

- 2010 USENIX Annual Technical Conference (USENIX ATC '10)

June 23-25, Boston, MA

Join us for the 2010 USENIX Annual Technical Conference (USENIX ATC

'10) which will take place June 23-25, 2010, in Boston, MA, as part of

the USENIX Federated Conferences Week.

Don't miss the 3-day conference program filled with the latest

innovative work in systems research, including invited talks by Ivan

Sutherland on "Some Thoughts About Concurrency," Ben Fry on

"Visualizing Data," and Matt Welsh on "RoboBees." Find out about the

latest in groundbreaking systems research in the paper presentations,

WiPs, and a poster session. Since USENIX ATC '10 is part of USENIX

Federated Conferences Week, you'll also have increased opportunities

to network with peers across multiple disciplines.

Register by June 1 and save up to $400! Additional discounts are available.

http://www.usenix.org/atc10/lg

- CiscoLive! 2010

June 27-July 1, Mandalay Bay, Las Vegas, NV

http://www.ciscolive.com/attendees/activities/.

- GUADEC 2010

July 24-30, 2010, The Hague, Netherlands

http://www.guadec.org/.

- Black Hat USA

July 24-27, Caesars Palace, Las Vegas, Nev.

http://www.blackhat.com/html/bh-us-10/bh-us-10-home.html.

- LinuxCon 2010

August 10-12, 2010, Renaissance Waterfront, Boston, MA

http://events.linuxfoundation.org/events/linuxcon.

- USENIX Security '10

August 11-13, Washington, DC

http://usenix.com/events/sec10.

- LinuxCon Japan 2010

September 27-29, Roppongi Academy, Tokyo, Japan

http://events.linuxfoundation.org/events/linuxcon-japan/.

Distro News

Ubuntu 10.04 Long Term Support (LTS) Server Reaches for the Clouds


Canonical has released Ubuntu 10.04 LTS Server Edition with extended

security and maintenance updates free of charge to all users for five

years (versus 18 months for a standard release). The third and latest

LTS version of the popular Linux desktop distribution, Ubuntu 10.04

LTS delivers on the standing commitment to release a version of Ubuntu

every two years and builds on the success of Ubuntu 8.04 LTS. It has

been available for free download since April 29th.

Ubuntu 10.04 extends the cloud-computing capability of Ubuntu

Enterprise Cloud with Eucalyptus - a technology that is becoming

widely used as a basis for building private clouds. Ubuntu 10.04 LTS

also includes 20 major packages added or updated since the previous

LTS release, giving new and upgrading users a wide range of

applications that can be easily installed at launch.

Over 75 organizations have signaled their intent to certify

applications on the platform including Alfresco, Ingres, IBM, VMware,

Zimbra, Yahoo and many others with more expected to follow

post-launch. Dell has announced its intention to support Ubuntu 10.04

LTS Server Edition and will offer Ubuntu Enterprise Cloud as an option

on their PowerEdge-C product line - servers specifically designed for

building cloud environments. Intel has also been assessing Ubuntu

Enterprise Cloud and jointly published a white-paper with Canonical on

how to best deploy the technology on Intel's latest Xeon processors.

New features in Ubuntu 10.04 LTS Server Edition:

* Direct upgrade path for Ubuntu 8.04 LTS and Ubuntu 9.10 users;

* Stability and security enhancements for LTS including:

-- Five years of security and maintenance updates free to all

users;

-- AppArmor security by default on key packages;

-- Kernel hardening (memory protection, module loading

blocking, address space layout randomization);

-- Encrypted Home and Private directories;

* Virtualization enhancements including:

-- KVM now supports KSM memory aggregation and live migration

of virtual machines;

-- Automated and fast image creation with VMBuilder;

-- Ubuntu as a Virtual Machine (VM) now supported for VMware,

Xen, KVM, VirtualBox, EC2 and UEC;

* Ubuntu Enterprise Cloud (UEC) and Amazon EC2 enhancements

including:

-- EC2 and UEC images are included in five years of free

security and support updates;

-- Minimal installation profile for minimum footprint VMs

optimized for EC2;

-- Ubuntu EC2 images can be booted from EBS;

-- Easily customized images at boot time for super

flexibility using cloud-init;

-- Puppet configuration management framework can be used to

mass control instances from their start.

* Advanced storage capabilities, including RAID, iSCSI and

multipath support;

* Simpler to mass deploy and manage:

-- Puppet integration from the installer and in the cloud.

-- Version control changes (integrated with Puppet) provides

history and accountability.

-- Many new and improved installation profiles.

Linux Gazette spoke with Nick Barcet, Ubuntu Server Product Manager,

and Gerry Carr, Platform Marketing Manager, just before 10.04 was

released.

Carr said that this was the third release of Ubuntu Enterprise Cloud

(UEC) and first on an LTS release. "This is a significant upgrade,"

Carr told LG. "This is well-positioned to take us toward more

mission-critical deployments in the data center."

"This release will also show a significant shift in ISV support,"

Carr said. "We expect about 75 ISVs will declare support for 10.04

Server when released and more will follow. ...This is more than

doubling the number of ISVs supporting Ubuntu."

Barcet explained that many IT departments are experimenting with Cloud

architectures and seem to be considering UEC in hybrid public and private

architectures. There are now about 200 UEC deployments a day, and the

total is approaching 11,000 deployments, he said.

Ubuntu 10.10 is scheduled for release in October and Ubuntu 11 is

scheduled for the Spring of 2011. The next LTS release will be in

2012.

Ubuntu 10.04 LTS Server Edition, including Ubuntu Enterprise Cloud, is

widely available for free download from http://www.ubuntu.com. Users

can upgrade directly to Ubuntu 10.04 LTS from the previous Ubuntu 8.04

LTS version as well as from the Ubuntu 9.10 release.

Ubuntu 10.04 LTS Desktop has a new look and social network ties


The new desktop edition of Ubuntu 10.04 LTS will feature extensive

design work, a nimbler UI, faster boot speed, social network

integration, online services and the Ubuntu One Music Store.

Ubuntu 10.04 LTS Desktop also includes three years of support through

free security and maintenance updates.

"Ubuntu 10.04 LTS challenges the perceptions of the Linux desktop,

bringing a whole new category of users to the world of Ubuntu," said

Jane Silber, CEO, Canonical. "Changes like the new look and feel and

the addition of a music store, layered on top of our relentless focus

on delivering an intuitive and attractive user experience for new and

existing Ubuntu users -- these are the bridging elements to the

mainstream market that our community, our partners and our users

really want. Long-term support makes Ubuntu 10.04 LTS very attractive

to corporate IT as well."

New in 10.04 LTS:

* Boot speed: Noticeably quicker on almost any machine.

* Social from the start: The new 'Me Menu' in Ubuntu 10.04 LTS

consolidates the process of accessing and updating social

networks including Facebook, Digg, Twitter and Identi.ca. The Me

Menu also integrates chat channels.

* Ubuntu One: Enhanced desktop integration for the online service

means files and folders and bookmarks can be shared and saved on

the cloud more easily.

* Ubuntu One Music Store: Music from the world's largest labels

and greatest bands available direct to Ubuntu users through the

default music player. Purchase tracks, store in Ubuntu One and

share DRM-free music from one location across multiple computers

and devices.

* Ubuntu Software Centre 2.0: An easy way to find new software,

and keep track of it once it's installed.

* Ubuntu 10.04 Netbook Edition (UNE): As well as benefiting from

the improvements in the Desktop Edition, netbook users will see

even faster boot speeds on SSD-based devices, and faster

suspend/resume that will extend battery life.

Ubuntu 10.04 LTS Desktop Edition and Ubuntu 10.04 Netbook Edition are

entirely free of charge and are available for download from

http://www.ubuntu.com.

Fedora 13 "Goddard" Beta aims to be a rocket


The Beta release of Fedora 13, codenamed "Goddard" after scientist and

liquid-fuel rocketry pioneer Robert Goddard, blasted off in mid-April.

Only critical bug fixes will be pushed as updates ahead of the general

release of Fedora 13, which is scheduled for the middle of May.

The available Live images make it easy to try out Fedora - if you

write one to a USB key, you can add personal data and your favorite

applications as you go.

Here are some of the changes that are propelling the Fedora 13 Beta:

* The goal in Goddard is to automate some of the hardware and

software tasks that help users get their work done. Using powerful

back-end desktop technologies, PackageKit is designed to detect when

the user plugs in a USB or parallel port printer, inserts a specialized

CD (such as a collection of music files, as opposed to a standard audio

CD), or downloads or opens an archive file, and to offer to install

software helpers.

* The Fedora 13 Beta allows developers to install and try a

parallel-installable Python 3 stack for the first time. This

feature allows developers to write and test code using either the

current Python 2.6 or the next-generation Python 3 language.

* There are new functions in Fedora 13 Beta for the GNU debugger,

gdb, that allow it to deliver unified information for C/C++

libraries and Python in the same running process. Programmers who

are writing Python code that wraps or calls C/C++ functions to

enhance performance and rapid development can now more quickly and

efficiently detect and debug problems in their code using this

work.

* System administrators can also try out some of the advanced file

system improvements in Fedora 13 Beta. New features in the btrfs

file system allow for rollbacks of entire file system states,

making application testing and system recovery more powerful than

ever.

* During its development cycle, Fedora 13 also featured for the

first time an installable version of Zarafa, a drop-in groupware

replacement for Exchange with full featured email, calendaring,

and other collaboration tools for use by both Linux and Microsoft

clients. A usable and familiar Web interface for users, and

support for POP/IMAP and other protocols, is included along with

tools for integration with existing Linux services.

To try Fedora 13 Beta in both 32-bit and 64-bit versions, visit

http://fedoraproject.org/get-prerelease.

Mepis antiX 8.5 Released


April saw the release of antiX MEPIS 8.5, a lightweight MEPIS variant

designed for older computers. Nine months after the release of antiX

M8.2, antiX MEPIS 8.5 - a fast, light, flexible and complete desktop

and live CD based on SimplyMEPIS and Debian 'testing' - is now

available in full and base versions.

This release defaults to a fully customised IceWM desktop (Fluxbox,

wmii and dwm are also installed) using a SimplyMEPIS 2.6.32 kernel,

tweaked MEPIS Assistants for better compatibility in antiX and a range

of applications for desktop use, including Iceape for Internet needs,

AbiWord and Gnumeric for office use, XMMS and Google's music manager

for audio, gxine, MPlayer and GNOME MPlayer for video.

This antiX release works on computers with as little as 64 MB RAM,

though 128MB RAM is the recommended minimum, and the 486 kernel

version should work on AMD K5/K6.

Download antiX 8.5 here:

http://ftp.cc.uoc.gr/mirrors/linux/mepis/released/antix/.

Software and Product News

Xen.org Releases Xen 4.0 Hypervisor for the Cloud


Xen.org, the home of the open source Xen hypervisor, has released Xen

4.0, a collective effort of a global development team representing

more than 50 leading technology vendors, universities, and

virtualization experts. Leveraging the latest network cards optimized

for virtualization, Xen 4.0 provides users substantial performance and

scalability gains for any level of enterprise or cloud application

workload.

Xen 4.0 adds significant memory and security optimizations that will

drive virtualization and infrastructure performance. As a result,

virtualization is made suitable for all workloads, even network

intensive and high performance computing applications that would have

previously experienced compromised performance on any hypervisor. Xen

4.0 enables virtualization to be deployed ubiquitously, across every

server in a datacenter, bringing ease of management, secure

architecture, high availability, agility and efficiency to all

applications.

Key Highlights in Xen 4.0:

- Fault Tolerance - Xen 4.0 now supports live transactional

synchronization of VM states between physical servers as a basic

component, enabling administrators to guarantee a high degree of

service reliability.

- High Availability - Xen 4.0 leverages advanced reliability,

availability and serviceability (RAS) features in new Intel Xeon

(Nehalem-EX) and AMD Opteron processors.

- Netchannel2 - Xen 4.0's NetChannel2 takes advantage of significant

advancements in networking hardware such as SMART NICs with

multi-queue and SR-IOV functionality.

- Blktap2 - A new virtual hard disk (VHD) implementation in Xen 4.0

delivers high performance VM snapshots and cloning features as well as

the ability to do live virtual disk snapshots without stopping a VM

process.

- PVOps Domain 0 - Xen 4.0 is the first release from Xen.org to

formally support PVOps in the Domain0 (Dom0) Linux kernel; this new

kernel option allows administrators access to the most recent devices

supported by the Linux kernel.

- Memory Enhancements - New algorithms, such as Transcendent Memory

and Page Sharing have been introduced in Xen 4.0 to enhance the

performance and capabilities of hypervisor memory operations.

A full list of features can be found on the Xen Community web site:

http://xen.org.

GNOME Project Updates Free Desktop with 2.30 Release


On March 31st, the GNOME Project celebrated the release of GNOME 2.30,

the latest version of the popular, multi-platform free desktop

environment and developer platform. Released on schedule, GNOME 2.30

builds on top of a long series of successful six month releases,

including enhancements for user management, Web browsing, support for

Facebook chat, and new productivity features.

GNOME 2.30 also marks the last major release in the GNOME 2.x era.

There will be maintenance releases for 2.30, but the community now

focuses its efforts on GNOME 3.0.

The 2.30 release contains significant user-visible improvements,

adds numerous platform improvements for developers, and builds

towards the upcoming GNOME 3.0 release with a preview of the

revolutionary GNOME Shell. GNOME Shell, which will replace the

existing GNOME Panel, changes the way users will interact with the

desktop.

Also, this release includes a number of changes to GNOME's Orca

screen reader that improve its performance and usability on netbooks,

as well as platform improvements that ready GNOME's Accessibility

interface for GNOME 3.0. The 2.30 release also delivers comprehensive

support for more than 50 languages, and partial support for many

others.

"I'm really pleased with all of the updates in GNOME 2.30," said

Stormy Peters, GNOME Executive Director. "I'm excited that I can

automatically sync my Tomboy notes between my desktop and laptop

computer, easily configure Facebook chat in Empathy instant messenger,

and do more with PDFs in Evince. GNOME 2.30 provides everything I need

for work and play."

GNOME 2.30 includes updates to Nautilus, the GNOME File Manager,

including a new split view mode; Nautilus also now defaults to browser

mode, replacing spatial mode.

GNOME System Tools now works with PolicyKit for authentication,

removing the Unlock button for managing users and services. The

dialogue to create a user has been improved: it only requires you to

provide the new user's name, and it offers user name suggestions.

Upon account creation everything will "just work". This also includes

support for encrypted home directories.

Vinagre, a remote desktop client for the GNOME Desktop, can now access

a remote machine more securely through an SSH tunnel. This requires an

SSH account on the remote machine.

Connecting to remote machines over a low-bandwidth link is made easier

by letting you choose a lower colour depth to save bandwidth and by

enabling JPEG compression. These options are available in the

connection dialogue in Vinagre.

You'll find detailed information about GNOME 2.30 in the release

notes: http://library.gnome.org/misc/release-notes/2.30/.

Oracle Announces MySQL Cluster 7.1


Demonstrating its investment in MySQL development, Oracle announced

the latest release of MySQL Cluster at the MySQL User Conference in

April.

MySQL Cluster 7.1 introduces MySQL Cluster Manager to automate the

management of the MySQL Cluster database. In addition, the new MySQL

Cluster Connector for Java enables higher throughput and lower latency

for Java-based services.

MySQL Cluster is a leading "real-time" relational database with no

single point of failure. By providing very high availability and

predictable millisecond response times, MySQL Cluster allows telecom

and embedded users to meet demanding real-time application

requirements.

With support for in-memory and disk-based data, automatic data

partitioning with load balancing, and the ability to add nodes to a

running cluster with zero downtime, MySQL Cluster 7.1 delivers

enhanced database scalability to handle unpredictable workloads.

MySQL Cluster 7.1 includes the following key features:

* MySQL Cluster Manager - allows users to manage a cluster of many

nodes.

* NDBINFO - presents real-time status and usage statistics from the

MySQL Cluster Data Nodes as SQL tables and views, providing developers

and administrators a simple and consistent means of proactively

monitoring and optimizing database performance and availability.

* MySQL Cluster Connector for Java - allows developers to write Java

applications that can use JDBC or JPA to communicate directly with

MySQL Cluster.

MySQL Cluster 7.1 can be downloaded today at http://dev.mysql.com/.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Away Mission - Upcoming in May: Citrix Synergy and Google IO

By Howard Dyckoff

This year, Synergy plays in San Francisco, in the neighborhood of Silicon

Valley and of both VMware and Oracle (and the formerly independent Sun

Microsystems). Last year it was in Vegas, with keynotes available via Web

streaming.

Synergy has become a new Virtualization destination since Citrix acquired

the primary sponsorship of the Xen virtualization platform from XenSource,

Inc., in October 2007. It is also slowly developing Open Source tendrils.

It was only last year, a few months after Synergy 2009, that Simon Crosby,

Citrix's CTO of Virtualization and Management, said, "XenServer is 100%

free, and also shortly fully open sourced. There is no revenue from it at

all." A further shift in the direction of Open Source is expected at

May's event.

Synergy will also offer sessions on VDI - virtual desktop infrastructure.

This seems to go hand in hand with Cloud Computing and the new Internet-based

terminal devices: smart phones and netbooks. We may be seeing the

re-invention of the Mainframe.

If you’ve attended a previous Synergy conference, you’re entitled to a

discount of $600 off the regular registration price. That would be only

$1,295 and there is no deadline on that price. Other discounts are based on

early registration.

At the Synergy Testing Center you can take a Citrix exam of your choice for

free. This can help you pursue a Citrix Certification, including the CCEE

and CCIA for Virtualization. The A15 and A16 beta exams are now available

exclusively to Synergy San Francisco attendees.

Citrix Synergy-SF May 12-14, 2010. San Francisco, CA

http://www.citrix.com/lang/English/events.asp

I would also recommend Google I/O 2010; unfortunately, it is closed to

new registrations. It filled up within 2 or 3 weeks of registration

opening.

The 2010 web developer conference has expanded to include a BootCamp day

before the main event, but you have to be already registered for Google I/O

to gain entrance to the BootCamp. These sessions assume you have no

experience working with Google technologies and will be led by Google

developers.

Google I/O features 80 sessions, more than 3,000 developers, and over 100

demonstrations from developers showcasing their technologies.

The word must have gotten out that, besides a well-organized conference and

great grub, Google bestowed unlocked G-phones on all developer attendees

last year. That's a big perk, and some of these phones showed up on eBay for

over $500 each, which meant several attendees effectively got their fees

covered, because...

The cost last year and this year was fairly amazing. The early bird

registration fee was $400 USD through April 16th, 2010; had space

remained after April 16th, it would have been $500 USD. The

Academia (students, professors, faculty/staff) registration fee was $100

USD. Such a bargain!

Of course, with the Icelandic volcanic eruption grounding flights, some European guests may have to cancel.

Send inquiries to googio2010@gmail.com and follow @googleio on Twitter.

Google IO Developer Conference (now closed): May 19 - 20, Moscone Center, San Francisco, CA

http://code.google.com/events/io/2010/

Talkback: Discuss this article with The Answer Gang


Copyright © 2010, Howard Dyckoff. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 174 of Linux Gazette, May 2010

Henry's Techno-Musings: My Help System

By Henry Grebler

Preamble

If a doctor or an electrician came to your house empty-handed, you'd

be a bit bemused, right? (I suppose, these days, if a doctor came to

your house, you'd be astonished! But let's not get side-tracked.) You

expect the professional and the tradesman to come with tools. How else

is she going to do her job?

Yet, in the computer industry, people show up on their first day at

work in a suit. And that's it. And, sometimes, not in a suit.

Strangely, not only are we not expected to bring our own tools, often

the very idea of computer people relying on tools is considered a

deficiency, a weakness. What?! You look phone numbers up in a

directory! What a wuss! A real sysadmin would just memorise the phone

book!

Context

I do not come to work empty-handed; I come with a large toolkit. Most

of what I know fits into several classes. It also fits on a CD.

These are the components of my toolkit:

- HAL - Henry Abstraction Layer

- Muscle memory

- Help System

- History files

This article is about the third of these.

Motivation

Someone comes to me and asks, "What do you know about SAN, Henry?"

"I don't know," I reply. "Let's have a look."

help san

I am presented with help on the Sandringham train timetable and

nuisance calls, in addition to quite a lot of practical information on

SAN accumulated over many years.

My help command is analogous to something which is a cross

between apropos and some implementations of man. It

has the wildcarding properties of apropos, but gives full

answers like man rather than the headings returned by

apropos.

It's different from man and complementary. man

contains reference information; the information returned by

help is more like what you might find in a HOWTO or a

quick-start guide. man tends to contain complete information

on a topic; the information returned by help is often

fragmentary, incomplete and very basic.

Unlike man, which is purely technical and only for the machine

I'm on, help is not restricted to a single platform; nor is

it restricted to technical matters. People who know me might be

shocked to learn that I have help on Microsoft offerings. I also have

help on how to use my bank accounts.

Invoking help

To use my help command, I type

help string

where string is any string which I think might be relevant. For example,

help rpm

should give me help on rpm.

My help directory

My help directory is $HOME/help. I was not

very consistent at first. Arguably, I'm still not. From what I can

see, my earliest entry is dated 14 Aug 1992.

These days, I work like this. Each entry is a file. Each file that I

want to be able to look up has help_ as the first

part of its filename. There may have been a reason for this, though,

honestly, I don't recall. Perhaps I thought there could be other

entries (non-text files like PDFs) that I would access some other way.

I also had subdirectories. I guess I did not want them "getting in the

way" when I was looking stuff up (so their names never began with

help_). I don't use subdirectories very often these

days; I can't recall the last time I did.

There's a subdir called FIREFOX which contains some

html files; another called HTML which includes

Quick_HTML_Reference.html; another called

fedora-install-guide-en_US. But these subdirs are on

the periphery of my help system.

The main game is the body of help_* files.

The "rules" are very simple:

My help command

My help command is implemented as a bash function defined in one of my many startup scripts (which form part of my HAL).

function help {

# Assume no arg means standard bash help

if [ "$1" = "" ]

then

builtin help

return

fi

echo 'For bash help, use builtin help'

# avoid backup files:

(set -x; less `ls ~/help/help_*${1}* | grep -v '~$'`)

if [ $? -ne 0 ]

then # try for bash help

builtin help $1

fi

}

The first few lines deal with the fact that I have appropriated

bash's builtin help command. So, if I just type

help, I get bash's help.

The line with the parentheses does the work. I'll come back to it.

The last 4 lines are supposed to help me coexist with bash.

In theory, if I don't have help on the subject in my database, maybe I

meant the bash command. In writing this up, I have discovered that

this part doesn't work! This supports somebody's law that every

non-trivial program has bugs.

It also supports the notion that something does not have to be perfect

to be extremely useful. "Perfect is the enemy of good", as Ben said

recently.
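For readers who want the fallback to work, here is one possible repair, offered as a sketch and not as Henry's own code. A likely culprit is that less, started at a terminal with no file names, reports an error but still exits with status 0, so `$?` never signals "nothing found"; rather than rely on less's exit status at all, this version collects the matching files first and calls builtin help only when the list is empty. It assumes the same ~/help/help_* layout and emacs backup naming described in this article.

```bash
# Sketch only (not the original): a help function whose bash fallback
# fires when no help file matches.
# Assumes help files live in ~/help with names like help_<topics>,
# and that emacs backups (names ending in "~") should be skipped.
function help {
    # No argument: plain bash help, as before.
    if [ $# -eq 0 ]; then
        builtin help
        return
    fi

    local f
    local -a files=()
    # ${1} is left unquoted, as in the original, so wildcards typed in
    # the argument still work. The shell expands the pattern itself,
    # so no ls is needed.
    for f in ~/help/help_*${1}*; do
        # An unmatched pattern is left as literal text, so keep only
        # real files, and drop emacs backup files.
        if [[ -f $f && $f != *~ ]]; then
            files+=("$f")
        fi
    done

    if (( ${#files[@]} > 0 )); then
        echo 'For bash help, use builtin help'
        (set -x; less "${files[@]}")
    else
        echo "No help files match '$1'; trying bash's builtin help"
        builtin help "$1"
    fi
}
```

The design choice is simply to test for the thing we care about (were any files found?) instead of inferring it from another program's exit code.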

The main command

less `ls ~/help/help_*${1}* | grep -v '~$'`

Let's start at the inside.

ls ~/help/help_*${1}*

This lists all files in ~/help

(i.e. $HOME/help) which start with help_

and have the specified string somewhere in the rest of the filename.

I use emacs as my editor. It creates backup files which have

a tilde (~) as the last character of the filename. I

don't want to see these backup files; the grep removes them

from the list.

The less command then displays the files left in the list,

sometimes one file, often several.
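To see exactly which files the inner command hands to less, it can be tried in a scratch directory. The directory comes from mktemp and the file names are invented for this illustration; only the shape of the pipeline is taken from the help function.

```bash
# Demonstration in a scratch directory rather than ~/help;
# the file names are made up for the example.
demo=$(mktemp -d)
touch "$demo/help_rpm" "$demo/help_rpm~" "$demo/help_yum_rpm" "$demo/help_bsd"

# Same shape as the inner command: every help_* file whose name
# contains "rpm", minus emacs backups (names ending in ~).
ls "$demo"/help_*rpm* | grep -v '~$'

# Tidy up afterwards with: rm -r "$demo"
```

It prints the paths of help_rpm and help_yum_rpm: help_rpm~ is removed by the grep, and help_bsd never matches the pattern. Note that "rpm" anywhere after help_ is enough, which is what lets one file answer to several search strings.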

Before I talk about my less command, I'll make one more

comment about the line with the parentheses. It starts with

set -x. Long ago, when I first met Unix (before Linux

existed, I think), I read somewhere that Unix commands do their work

quietly. That isn't how I like to operate; I like lots of

confirmation: this is what I did, this is the command I used, these

are the files on which I operated.

I don't expect others to share my preferences. Those who prefer

silence can omit the set -x.

My 'less' command

Using less on several files would be vastly less useful without a

little wrinkle.

I prefer to use less(1) as my $PAGER. However, standard

less needs a little help. I want to be able to move from one

file to the next and back quickly. By default, less uses

:n and :p for this, but that's 3

keystrokes, and there is no way that 3 keystrokes can achieve what I

want. I guess I could program the function keys to generate

:n and :p,

but there are issues with that.

I prefer to use the LEFTARROW and RIGHTARROW keys. By default, less

uses these to mean "scroll horizontally", but I never want to be able

to scroll horizontally in less, so I appropriate those keys.

I want RIGHTARROW to mean "next file" and LEFTARROW to mean "previous

file".

If you want to do things my way, get hold of my

.lesskey file and either copy it to your home

directory or use it to edit your own .lesskey file -

see lesskey(1). Either way, check what's there: you may not want

everything I have. See below.

Then run lesskey to compile the

.lesskey file into something less can use.
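Henry's own .lesskey is not reproduced here, so what follows is only a minimal sketch of the arrow-key part. It assumes a version of less whose lesskey understands the key names \kr and \kl (right and left arrow) and the actions next-file and prev-file (the commands normally behind :n and :p). It backs up any existing ~/.lesskey instead of merging, so fold your old bindings back in by hand.

```bash
# Sketch: RIGHTARROW = next file, LEFTARROW = previous file in less.
src="$HOME/.lesskey"

# Keep a copy of any existing lesskey source rather than losing it.
if [ -e "$src" ]; then
    cp "$src" "$src.bak"
fi

# Quoted 'EOF' keeps the backslashes in \kr and \kl intact.
cat > "$src" <<'EOF'
#command
\kr next-file
\kl prev-file
EOF

# Older versions of less only read the compiled ~/.less, so compile it;
# on versions that read ~/.lesskey directly this step is just redundant.
if command -v lesskey >/dev/null 2>&1; then
    lesskey "$src"
fi
```

With this in place, `less file1 file2 file3` lets you hold down an arrow key to flip through the files; horizontal scrolling on those keys is given up, as described above.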

Analysis

In my view this is a wonderful example of appropriate technology. It's

appropriate when all factors are taken into consideration. The most

important factor is the customer. I designed this to be used by me, no

one else. Of course, "design" is a gross overstatement; the mechanism

grew organically.

Most of my interaction with computers is via the command line,

typically running in an xterm. Whenever I can, I use

bash. That's pretty nearly always: bash is the

default shell on Linux (on many distributions, what we think of as /bin/sh is

really bash); it is now standard (/bin/bash) on

recent versions of Solaris; and is readily available on BSD. When I'm

not typing commands, it's probably because I've invoked emacs.

Whether I'm in emacs or in bash, most of the

commands I use are the same.

So, editing a help file involves one of my bread-and-butter

activities; nothing new to learn.

Probably the next most common thing I do is use less to

browse files. It is my mail reader of choice (unless I absolutely

can't use less). Consequently, reading my help files also

involves one of my bread-and-butter activities.

Most importantly, it's wonderfully portable. I can take the whole help

directory (and other stuff) on a CD wherever I go and copy it to local

disk in no time.

The system is deliberately as unstructured as possible. There is a

danger that if I make entering information too onerous, I won't do it.

In many organisations, generally helpful information must be entered

in some markup language. If I have to organise my help information at

the time I'm recording it, I'm going to have to suspend doing whatever

task I'm working on. That won't do.

Typically, I just swipe and paste text from where I'm working into a

file. I'm just creating aids for myself - the equivalent of rough

notes one might write on a scrap of paper.

If, some time later, I return to a help file because I'm working in

the same area, I might clean the information a little. Or not.

The major purpose is to save time. If I spend a long time trying to

work out how to do something, I don't want to have to repeat that

exercise in the future.

Of course I'm human and fallible. Sometimes I'm so happy that I got

the thing to work, I just jump in and use it; I forget to record

useful information. But, hopefully, I'll catch it next time.

Typical use

Let's take an example. I've recently moved to FreeBSD. I'm a bit of a

newbie. Let's see what I feel I need to know.

ls -lat $HOME/help/help_*bsd* | grep -v '~' | cut -c 30-
3897 Mar 21 07:35 /home/henryg/help/help_freew_bsd_diary
3030 Mar 19 14:50 /home/henryg/help/help_bsd_build_notes
 100 Mar 19 11:12 /home/henryg/help/help_bsd
 113 Mar 17 16:38 /home/henryg/help/help_bsd_admin
 261 Mar 17 10:37 /home/henryg/help/help_bsd_build

So when I type help bsd, the effect is to load the five files above into less. Because I can use the left- and right-arrow keys to navigate from one file to the next (thanks to the key bindings in my .lesskey file, listed under Resources), and because these keys auto-repeat, I can get to any file very quickly. Since I put this stuff in, it follows that I know what's there (more or less). The way my brain works these days, I'm much better at recognising and selecting than I am at recalling.

If I'm looking at a file with less and decide I want to modify it in some way, I can simply type v and I'm in emacs, ready to edit.
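The article doesn't show how less knows to start emacs. One common way, assuming a bash login setup with ~/.bashrc, is to set the editor variables that less consults. According to the less documentation, v runs $VISUAL, falling back to $EDITOR and then to vi. This is a sketch of a typical configuration, not necessarily the author's.

```bash
# Assumed ~/.bashrc fragment: less's "v" command launches $VISUAL,
# or $EDITOR if VISUAL is unset, or vi if neither is set.
export VISUAL=emacs
export EDITOR=emacs
```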

If all else fails, I can grep through the help files, since they are just text files. Or I can list the help directory to see if any of the filenames trigger recognition.
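Here is a minimal sketch of that fallback search, wrapped in a hypothetical helper called helpgrep (the name is made up for illustration; the author simply runs grep by hand). It searches every help file case-insensitively, prints only the names of the matching files, and, like the help command, drops emacs backup files ending in ~.

```bash
# Hypothetical helper, not part of the author's setup.
helpgrep() {
    # -i: ignore case; -l: list matching file names only.
    # ~/help*/ covers ~/help and any other directory starting with "help".
    grep -il -- "$1" ~/help*/help_* 2>/dev/null | grep -v '~$'
}
```

For example, helpgrep alsa would list every help file that mentions ALSA in any capitalisation.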

This rarely happens, but let's say I'm looking for some info on xxx and help xxx doesn't produce anything useful. I resort to grep and find the info. Why didn't help xxx deliver the relevant file? I realise that I have "misfiled" it. For instance, I stored some info in help_audio but subsequently looked for it with help sound. OK, simple solution:

mv help_audio help_audio_sound

I can now find the relevant help by using either string (or a substring of either). For instance, either of these commands will deliver the above file (and possibly others):

help aud
help soun

I can "file" some information under a dozen categories (strings) virtually for free. I simply need to include more items in the name of the help file.
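To see why a substring of either keyword works, here is a small demonstration in a throwaway directory (the file names are illustrative). It uses the same help_*KEY* pattern and backup-file filter as the help command, but with relative paths so nothing in $HOME is touched.

```bash
# Scratch directory holding one multi-keyword help file, an emacs
# backup copy of it, and an unrelated file.
cd "$(mktemp -d)" || exit 1
touch help_audio_sound 'help_audio_sound~' help_rpm

# Same pattern shape as the help command: help_*KEY*, minus backups.
for key in aud soun; do
    printf 'help %s -> %s\n' "$key" "$(ls help_*"$key"* | grep -v '~$')"
done
```

Both iterations print help_audio_sound, because each substring appears somewhere in that one file name.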

The contents of help files

Please remember that the information in a help file is what I believe will be useful to me at some time in the future. If you are interested in such a mechanism for yourself, I expect you to put into your help files the sort of information that you will find useful. That could be quite different.

Just so you get the flavour, here's the first part of help_rpm:

### For complicated stuff see help_rpm2
### For Uninstall see help_rpm_uninstall
------------------------------------------------------------------------
RPM=wxpythongtk-2.4.2.4-1.rhfc2.nr.i386.rpm
rpm --query -p $RPM -i
rpm --query -p $RPM -l | cut -d / -f1-3 | uniq | head
rpm --query -p $RPM -l | wc
rpm --query -p $RPM -l

# Test Install
# rpm -i --test $RPM # Use the long option
rpm --install --test $RPM

# Really install
# rpm -i $RPM # Use the long option
rpm --install $RPM
++++++++++++++++++++++++++++++++++++++++++++++++
Tough installs for extreme situations:
rpm -i $RPM --force
rpm -i $RPM --nodeps # Don't check dependencies

Typically, to perform an install, I set the variable RPM, then copy and paste each of the rpm commands into an xterm. If I want, I can pretty much turn my brain off and run on autopilot.

More importantly, it saves me a lot of typing, it minimises typos, and it allows me to concentrate on more global matters rather than the mechanics of an install.

One more example: here's help on the string pub. (Yours might tell you about watering holes.) I have two help files concerned with public transport: help_public_transport and help_aaa_public_transport. I created the first one first. It has some detailed information on bus routes, and it's the one I usually don't want to see. So, when I created the second file, I put aaa at the start of the string so that it will always show as the first file in less (ls sorts names alphabetically, so help_aaa_public_transport comes before help_public_transport).
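A quick check of that ordering, again in a throwaway directory with the two file names from the text. This is a sketch for illustration only. Both the shell's glob expansion and ls return the names sorted, so the aaa file is listed, and therefore opened by less, first.

```bash
# Scratch directory with the two public-transport help files.
cd "$(mktemp -d)" || exit 1
touch help_public_transport help_aaa_public_transport

# Sorted listing: help_aaa_public_transport comes out first.
ls help_*pub*
```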

When I want to go somewhere by public transport, I visit the Viclink Journey Planner. It's a marvellous resource, but it can be a little too general at times. To get answers more quickly, it helps to provide detailed strings: strings that I would never bother to remember and could probably never type correctly. So my help shows me the strings I need for the stations I frequent:

Gardenvale Railway Station (Brighton)
Ormond Railway Station (Ormond)
Caulfield Railway Station (Caulfield East)
Malvern Railway Station (Malvern)
Armadale Railway Station (Armadale)
Southern Cross Railway Station (Melbourne City)
Richmond Railway Station (Richmond)
Flinders Street Railway Station (Melbourne City)
Melbourne Central Railway Station (Melbourne City)

I just paste the relevant strings into the form and get answers very quickly.

Extensions

After many years working with one organisation, I've recently been contracting to different companies, and I am still working out how I want to handle this. I'm thinking that the stuff I gather while working at X Corp could go into the directory help_X_Corp and the Y Corp stuff could go into help_Y_Corp. I started doing something along these lines at my last contract.

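I'm leaning towards formalising this, using a modified form of the help command. The key line is:

               v
less `ls ~/help*/help_*${1}* | grep -v '~$'`
               ^

I've added an extra asterisk (marked by v and ^) after ~/help, so the pattern matches every help directory in my home directory: ~/help, ~/help_X_Corp, ~/help_Y_Corp, and so on.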
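To illustrate what the extra asterisk changes, here is a sketch in a scratch directory with made-up contents. Relative paths stand in for ~/help* so the real home directory is left alone. The old pattern only looks in help/, while help*/ expands to every directory whose name starts with help.

```bash
# Scratch layout mimicking ~/help plus two per-client directories.
cd "$(mktemp -d)" || exit 1
mkdir help help_X_Corp help_Y_Corp
touch help/help_rpm help_X_Corp/help_vpn help_Y_Corp/help_vpn

# Old pattern: only the original help directory is searched.
ls help/help_*vpn* 2>/dev/null || echo 'help/: no match'
# New pattern: finds the vpn notes in both client directories.
ls help*/help_*vpn*
```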

In Conclusion

That's it in a nutshell. Anyone can adapt what I've done to suit him/herself. That's the beauty of computers, and especially of open source software: near-infinite flexibility and customisation. It's such a shame that we waste that power on "look and feel" (skins and themes). "Off the rack" fits most people reasonably well without fitting anyone perfectly. But anyone can adapt a system like this to suit as closely as desired, with enough effort.

It doesn't matter if you are a computer nerd or a neophyte. It doesn't really matter what OS you use (though Microsoft makes it harder). You need an editor and some way to select and display files. The only difficulty I can see is if your only access is through GUIs, because then you have to write (or get someone to write) the selection code (and maybe the display code). I guess the Microsoft way is to also use the editor (say, WordPad) as the display program. I'm not really keen on that, but others might prefer it.

If I'd wanted that functionality, I could have used emacs in place of less. I think I would have lost a lot if I'd done that.

Postscript

I wrote the above a few days ago. The next day, pricked by some of the points I'd made, I modified my help command. I've included it in Resources below.

Resources

# .lesskey - less keybinding file
# Henry Grebler 26 May 98 Add alternative left-scroll.
# Henry Grebler 26 May 97 First cut.
#=============================================================================#
# Note on comments:
# Blank lines and lines which start with a pound sign (#) are
# ignored, except for the special section header lines.
#=============================================================================#
# Note on usage:
# This file is the input file to lesskey. Without args, lesskey
# processes $HOME/.lesskey (a text file) to produce $HOME/.less (a
# binary file (which is the init file for less)).
#=============================================================================#

x quit
X quit
\e[C next-file
\e[D prev-file
\eOC next-file
\eOD prev-file

# By default, \e[ is left-scroll. However, the inclusion of \e[C above
# seems to have disabled \e[ (sort of understandable). So I add \e{ as
# a synonym for left-scroll (and add \e} as a synonym for right-scroll
# as an afterthought for consistency).
# \e{ left-scroll
# \e} right-scroll

My latest help command:

function help {
    # Assume no arg means standard help
    if [ "$1" = "" ]
    then
        builtin help
        return
    fi
    echo 'For bash help, use builtin help'
    # avoid backup files:
    files=`ls ~/help*/help_*${1}* 2>/dev/null | grep -v "~$"`
    if [ "$files" = '' ]
    then    # no matches: try for bash help
        builtin help "$1"
        return
    fi
    echo "$files"
    echo 'ls ~/help*/help_*'${1}'* | grep -v "~$"'
    less $files
}
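The function above splits the output of ls on whitespace, which is fine for the author's file names but would break a help file whose name contained a space. The following is a sketch of one alternative, not the author's code: it globs directly into a bash array with nullglob enabled, so names survive intact. ${1} is left unquoted inside the pattern, as in the original, so wildcards in the argument still work.

```bash
# Hypothetical variant of the help function (not the author's version).
# Requires bash: arrays, shopt, [[ ]] and local.
function help {
    # No argument: fall through to bash's own help
    if [ -z "$1" ]; then
        builtin help
        return
    fi
    echo 'For bash help, use builtin help'
    local f restore_nullglob
    local -a files=()
    # Remember the current nullglob setting so it can be restored
    restore_nullglob=$(shopt -p nullglob || true)
    shopt -s nullglob            # unmatched globs expand to nothing
    for f in ~/help*/help_*${1}*; do
        # Skip emacs backup files (names ending in ~)
        [[ $f == *'~' ]] || files+=("$f")
    done
    eval "$restore_nullglob"     # put nullglob back as it was
    if [ ${#files[@]} -eq 0 ]; then
        # No help files matched: try bash's help for a builtin
        builtin help "$1"
        return
    fi
    printf '%s\n' "${files[@]}"
    less "${files[@]}"
}
```

Restoring nullglob matters because the function runs in the interactive shell; leaving the option switched on would silently change how every later unmatched glob behaves.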

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/grebler.jpg)

Henry has spent his days working with computers, mostly for computer manufacturers or software developers. His early computer experience includes relics such as punch cards, paper tape and mag tape. It is his darkest secret that he has been paid to do the sorts of things he would have paid money to be allowed to do. Just don't tell any of his employers.

He has used Linux as his personal home desktop since the family got its first PC in 1996. Back then, when the family shared the one PC, it was a dual-boot Windows/Slackware setup. Now that each member has his/her own computer, Henry somehow survives in a purely Linux world.

He lives in a suburb of Melbourne, Australia.

Copyright © 2010, Henry Grebler. Released under the

Open Publication License

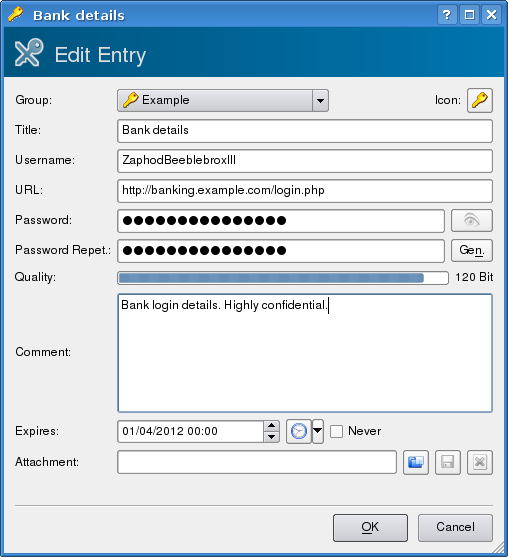

unless otherwise noted in the body of the article. Linux Gazette is not